A few months ago I deployed and configured an Ubuntu 12.04 LTS Server Edition box to run Nagios and do on-site monitoring of key servers. Yesterday I applied a bunch of security and hotfix updates to the server, since it was VERY behind on a lot of packages (more than 20 security updates and whatnot). Following a reboot (I know, I know, not necessary, but I’m still firmly rooted in the land of Windows, where updates and reboots go hand in hand; for shame) I noticed a surge of alerts from Nagios and was thoroughly annoyed to see that the load on the server was consistently way too high: I was getting Warning / Critical alerts nearly every 15 minutes. Uh oh!

***** Nagios *****

Notification Type: PROBLEM

Service: Current Load

Host: localhost

Host Alias: localhost

Address: 127.0.0.1

State: CRITICAL

Date/Time: Wed Dec 4 23:48:58 EST 2013

Additional Info:

CRITICAL – load average: 5.89, 4.95, 4.04

Status Details: https://nagios/nagios/cgi-bin/extinfo.cgi?type=1&host=localhost

This continued for a day until I got fed up with it. I told Nagios to stop paying attention to that service and to ignore it completely. I basically shit-canned the project and ignored it for about 24 hours until I realized it was going to be a slow day today. So I SSH’d into the box, loaded up top, and waited. I waited and I waited and I waited. Every 15 minutes I would see a surge of spawned processes for ping, check_snmp, and a few others. I started to get a clear picture of what was going on.

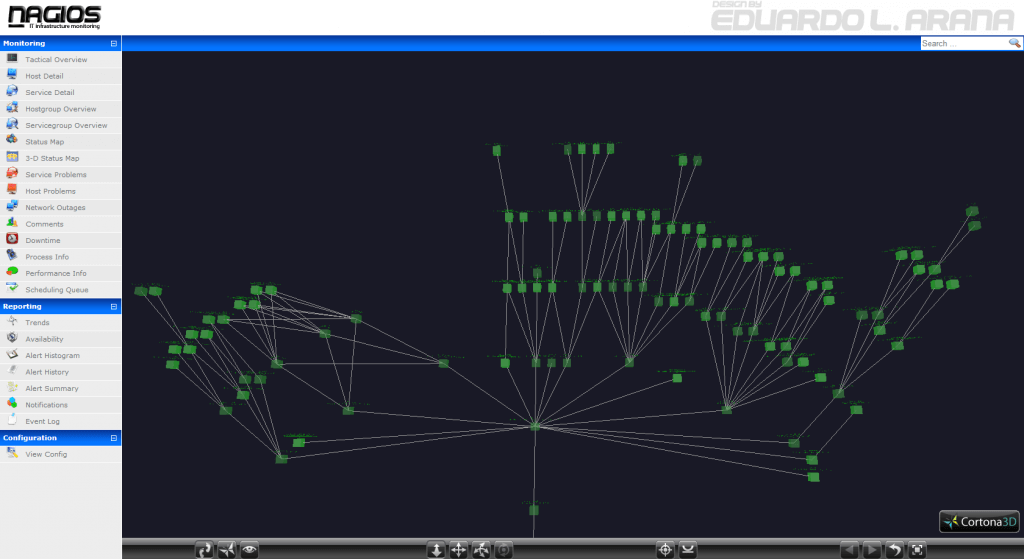

The reboot reset the next check time in Nagios to be the same for all devices, which was a big problem. We have 360+ devices in our monitoring scheme and, across them, 760+ services being monitored (with more coming in the future; I still need to set up Windows device monitoring for our DCs and file servers). They were all trying to run at the same time. All 760. And this isn’t a ridiculously beefy server we’re talking about here.

srv-nagios: Virtual Machine Details

VMware Virtual Platform

Intel(R) Xeon(R) CPU E5-2650 0 @ 2.00GHz

1GB RAM

20GB SCSI Virtual HDD
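To make the burst concrete, here is a minimal Python sketch of the situation described above. It is not how Nagios schedules anything internally; it only counts how many checks would fall due in the same minute if all 760 line up after a restart, versus a hypothetical case where they are staggered evenly across the 15-minute interval. The function name `checks_per_minute` is made up for illustration.

```python
from collections import Counter

def checks_per_minute(start_minutes, interval=15):
    """Count how many checks fall due in each minute of one interval."""
    return Counter(start % interval for start in start_minutes)

TOTAL_CHECKS = 760   # approximate service count from the post
INTERVAL = 15        # check interval in minutes, from the post

# After the restart: every check is due in the same minute.
synchronized = checks_per_minute([0] * TOTAL_CHECKS, INTERVAL)

# Hypothetical alternative: checks staggered one after another.
staggered = checks_per_minute(range(TOTAL_CHECKS), INTERVAL)

print(max(synchronized.values()))  # 760
print(max(staggered.values()))     # 51
```

Same number of checks either way; the only difference is whether a 1GB VM has to fork all of them at once.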

So after sitting on it a bit I decided to do some research and found that I could limit the number of simultaneous checks in Nagios. This is of course noted in the documentation. I must have glossed over it.

| Maximum Concurrent Service Checks | |
| --- | --- |
| Format: | `max_concurrent_checks=<max_checks>` |
| Example: | `max_concurrent_checks=20` |

This option allows you to specify the maximum number of service checks that can be run in parallel at any given time. Specifying a value of 1 for this variable essentially prevents any service checks from being run in parallel. Specifying a value of 0 (the default) does not place any restrictions on the number of concurrent checks. You’ll have to modify this value based on the system resources you have available on the machine that runs Nagios, as it directly affects the maximum load that will be imposed on the system (processor utilization, memory, etc.). More information on how to estimate how many concurrent checks you should allow can be found here.
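If you want to see what a given config is currently set to, something like the following Python sketch would do it. It assumes the main config file uses plain `key=value` lines with `#` comments; the helper name `read_max_concurrent_checks` and the sample text (including the `log_file` path) are hypothetical and not taken from my actual file. Point it at the contents of your own `nagios.cfg`, wherever your install keeps it.

```python
def read_max_concurrent_checks(config_text):
    """Return max_concurrent_checks from nagios.cfg-style text (0 if unset)."""
    value = 0  # documented default: no limit on concurrent checks
    for line in config_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        key, sep, raw = line.partition("=")
        if sep and key.strip() == "max_concurrent_checks":
            value = int(raw.strip())  # last occurrence wins in this sketch
    return value

# Hypothetical excerpt for illustration only.
sample = """
# main Nagios config (made-up sample)
log_file=/var/log/nagios/nagios.log
max_concurrent_checks=20
"""

print(read_max_concurrent_checks(sample))  # 20
print(read_max_concurrent_checks(""))      # 0
```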

Our setting was of course 0, meaning that Nagios tried to run as many checks as it wanted at the same time. After thinking on it a bit, I figured out what I wanted to do. We have 760-some-odd checks. We have 15-minute intervals. I did 760/15 and it came out to be about 51 checks per minute. I started there. I set max_concurrent_checks to 51 and BAM, load immediately dropped down to a more stable level.
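The back-of-the-envelope math, as a small Python sketch. Note that this is a rule of thumb and not an official Nagios formula: it takes a checks-per-minute rate and reuses it as a concurrency cap, which happened to work here. The helper name `suggested_limit` and the 900-service figure are made up for illustration.

```python
import math

def suggested_limit(total_checks, interval_minutes):
    """Rough starting point: checks per minute, rounded up."""
    return math.ceil(total_checks / interval_minutes)

total_checks = 760      # "760-some-odd" services, per the post
interval_minutes = 15   # check interval, per the post

per_minute = total_checks / interval_minutes
print(round(per_minute, 2))                             # 50.67
print(suggested_limit(total_checks, interval_minutes))  # 51

# Hypothetical growth: 900 services at the same interval.
print(suggested_limit(900, interval_minutes))           # 60
```

Treat the result as a starting value to tune against the actual load average, not as a guarantee.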

OK – load average: 0.00, 0.13, 0.19

As I add more devices and services to Nagios I will tweak the value. Checking 60 things at a time should be easy enough to handle: it works out to about 1 a second, and since the vast majority of my checks and services are pings and simple SNMP queries, it shouldn’t be too bad.

Here’s hoping.

A happy Nagios is a happy Mike.