Today on the way home from work I was listening to a radio discussion (NJ 101.5) about the proposal to raise the minimum wage in NJ from $8.38/hour to $10.10/hour. The question presented was whether or not this made sense for NJ. I sat in my car thinking: who on earth could possibly be opposed to giving people who work any kind of job a little extra money to help make their lives a little easier?

Boy, oh boy, did I sorely overestimate the people of NJ on this one. The calls were overwhelmingly against it, with the standard cry being “How can small businesses afford to pay their employees anything more than what they’re currently being paid?”, to which my response is: what? A friend pointed out that businesses can deduct payroll from their taxable income, potentially offsetting some of the difference in cost. This is a valid point to consider, but I am taking a more person-oriented approach to the problem.

I am starting with a few base assumptions here:

- No student loans.

- No credit card debt.

- No car payment (beyond insurance).

- No TV/Internet/Phone Line for home use.

  - This would likely result in higher cell-phone data use charges. This is not factored into the calculations.

- An unrealistically low rent (even for a single bedroom in a larger apartment; good luck finding $500/month rent in NJ).

- Not the best monthly groceries (lots of fast food and/or unhealthy but calorie-dense food).

- A cell phone is included.

  - Please do not tell me that a cell phone of SOME kind is not a necessity in this day and age. If you’re going to suggest otherwise, then I suggest you turn your phone off for a full day, never mind a week, an entire month, or, god forbid, the entire damn year.

- Health insurance is included (hourly employees are typically not offered insurance through their employer, and the alternative is to take a tax penalty at the end of the year).

- No doing anything other than surviving every month: no entertainment, no hanging out with friends anywhere other than at home (or mooching off of friends for everything). This is as bare-bones as I can think of.

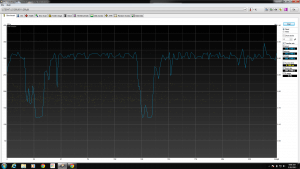

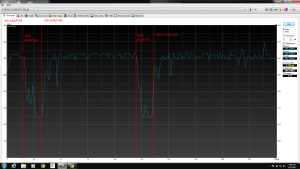

I came out with the following numbers (and I will post a copy of the sheet for you to look at if you wish):

For someone facing:

- $500/month rent

- $75/month power/gas

- $275/month groceries

- $40/month cell phone

- $65/month car insurance

- $100/month health insurance

- $100/month gasoline purely for travel to/from work

They would need to work:

- At $8.38 / hour

  - 34.5 hours / week NOT factoring in taxes

  - 36.5 hours / week factoring in just federal taxes

    - This does not factor in state taxes, unemployment insurance, etc.

- At $10.10 / hour

  - 28.5 hours / week NOT factoring in taxes

  - 30.5 hours / week factoring in just federal taxes

- At $13.50 / hour (what I would probably need to be paid to cover my current living expenses)

  - 21.5 hours / week NOT factoring in taxes

  - 22.5 hours / week factoring in just federal taxes
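For anyone who wants to check the no-tax figures without opening the sheet, here is a rough Python sketch of the arithmetic. It assumes a month counts as exactly four work weeks and that hours are rounded to the nearest half hour; both are assumptions that happen to line up with the numbers above, not something confirmed from the sheet. The names `BARE_BONES`, `hours_per_week`, and `round_to_half` are made up for illustration, and the tax-adjusted figures are not reproduced here.

```python
# Bare-bones monthly budget from the list above (dollars per month).
BARE_BONES = {
    "rent": 500,
    "power_gas": 75,
    "groceries": 275,
    "cell_phone": 40,
    "car_insurance": 65,
    "health_insurance": 100,
    "gasoline": 100,
}

WEEKS_PER_MONTH = 4  # assumption: a month is treated as four work weeks


def hours_per_week(monthly_expenses, hourly_wage, weeks_per_month=WEEKS_PER_MONTH):
    """Hours of work per week needed to cover the expenses, ignoring all taxes."""
    weekly_cost = sum(monthly_expenses.values()) / weeks_per_month
    return weekly_cost / hourly_wage


def round_to_half(hours):
    """Round to the nearest half hour (assumed rounding, for display only)."""
    return round(hours * 2) / 2


if __name__ == "__main__":
    for wage in (8.38, 10.10, 13.50):
        needed = round_to_half(hours_per_week(BARE_BONES, wage))
        print(f"${wage:.2f}/hour: {needed} hours/week before taxes")
```

The budget totals $1,155 a month, or $288.75 a week under the four-week assumption. Dividing by 52 weeks a year instead would give somewhat lower hours.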

Now, let’s examine my living expenses:

- $650/month rent

- $125/month power/gas

- $400/month groceries

- $50/month cell phone

- $78/month car insurance

- $88/month TV/Internet/Land Line

- $225/month health insurance (this is currently taken out of my salary)

- $275/month gasoline

I would need to work:

- At $8.38 / hour

  - 56 hours / week NOT factoring in taxes

  - 64.75 hours / week factoring in just federal taxes

- At $10.10 / hour

  - 46.5 hours / week NOT factoring in taxes

  - 53.5 hours / week factoring in just federal taxes

- At $13.50 / hour

  - 34.75 hours / week NOT factoring in taxes

  - 40 hours / week factoring in just federal taxes

    - This is why I use $13.50 / hour
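To put the two wage levels side by side, here is a small Python sketch that takes the hours quoted above as given and works out how many fewer hours a week the move from $8.38 to $10.10 would mean. Nothing is recalculated from the sheet; `QUOTED_HOURS` and `hours_saved` are illustrative names only.

```python
# Hours per week quoted above, as (at $8.38/hour, at $10.10/hour).
QUOTED_HOURS = {
    "bare-bones budget, no taxes": (34.5, 28.5),
    "bare-bones budget, federal taxes": (36.5, 30.5),
    "my budget, no taxes": (56.0, 46.5),
    "my budget, federal taxes": (64.75, 53.5),
}


def hours_saved(at_current_wage, at_proposed_wage):
    """Weekly hours no longer needed once the higher wage applies."""
    return at_current_wage - at_proposed_wage


if __name__ == "__main__":
    for label, (current, proposed) in QUOTED_HOURS.items():
        saved = hours_saved(current, proposed)
        share = saved / current
        print(f"{label}: {saved:g} fewer hours/week ({share:.0%} less)")
```

On the bare-bones budget that works out to six fewer hours a week, with or without federal taxes.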

So, clearly, there’s room for improvement here. These numbers are all rough guesswork, but I think you can see that this small bump in wages can make a minimum wage earner’s life MUCH easier, at very little overall cost. Well worth it, in my opinion.

Have a heart, you cold bastards, and try to put yourself in their shoes for a goddamn minute before you try to discredit a decent idea.

You can view the sheet I used to calculate these numbers here.

-M, out